Blog:ROSA Planet

A technical blog of ROSA Laboratory.

All content is published under Creative Commons Attribution-ShareAlike 3.0 License (CC-BY-SA)

Please subscribe to the RSS/Atom feed. If you have any questions, do not hesitate to contact us.

WiFi and Broadcom - Handling the Errors

Our developers have fixed two error-handling bugs in the proprietary driver for Broadcom WiFi adapters (broadcom-wl, a.k.a. broadcom-sta, a.k.a. wl). Both problems (#2146, #2667) led to kernel crashes at boot time on the laptops of several of our users.

By the way, not all major Linux distributions have these problems fixed at the time of writing.

LinuxCon Europe 2013 - About Data Races in the Linux Kernel

October 22, 2013 - Eugene Shatokhin, one of our developers, gave a talk devoted to data races in the Linux kernel modules at LinuxCon Europe (slides, notes for the slides).

Such errors could be very hard to find and their consequences may vary from negligible to critical. Hunting the races down is especially important for the Linux kernel, where, for example, the code of the drivers can be executed by many threads at the same time. Now add interrupts and other asynchronous events and remember that the synchronization rules for the data are not always fully described (if described at all)...

Most of the talk was about the tools that can detect data races in the Linux kernel. KernelStrider and RaceHound, two tools of which Eugene is one of the main developers, were covered in more detail.

KernelStrider collects data about the operation of the kernel component under analysis (e.g. a driver) at runtime. The information about memory accesses, allocations and deallocations, locks and unlocks, etc., is then analyzed in user space by ThreadSanitizer (Google). The algorithm of searching for races is briefly described here.

KernelStrider may issue false alarms in some cases. For example, false alarms happen when a network driver turns off interrupts in hardware and then accesses some common data without the risk of conflicts with the interrupt handlers.

RaceHound makes it possible to check the data race warnings issued by KernelStrider and find the real races among them. RaceHound works as follows.

- A software breakpoint is placed on an instruction in the binary code of the driver that may be involved in a data race.

- When the breakpoint triggers, RaceHound determines the address of the memory area which is about to be accessed by the instruction. Then it places a hardware breakpoint to track the accesses of the needed kinds (writes only or both reads and writes) to that memory area.

- A small delay is made before the execution of the instruction.

- If some other thread accesses that memory area during the delay, the hardware breakpoint will trigger and RaceHound will report a race.

That is, KernelStrider plays the part of a "detective" or an "analyst" here and narrows the range of "suspects" - the fragments of the code possibly involved in data races. RaceHound is then a covert monitoring system tracking these suspects. If it catches a suspect "red-handed", everything is clear, the "crime" (data race) is confirmed.
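The RaceHound steps listed above can be illustrated with a small user-space simulation. This is only a sketch of the idea, not RaceHound's code: `ToyMemory`, `probed_access` and the thread names are hypothetical, a Python dict stands in for memory, and a flag checked on every access stands in for the hardware breakpoint. The choice to watch writes only for a read and both reads and writes for a write is our assumption about how "the needed kinds" of accesses are selected.

```python
import threading
import time


class ToyMemory:
    """A dict standing in for memory, with one optional 'hardware watchpoint'."""

    def __init__(self):
        self._cells = {}
        self._lock = threading.Lock()
        self._watch = None       # (address, owner, writes_only) or None
        self.conflicts = []      # accesses caught while the watchpoint was armed

    def set_watch(self, addr, owner, writes_only):
        with self._lock:
            self._watch = (addr, owner, writes_only)

    def clear_watch(self):
        with self._lock:
            self._watch = None

    def access(self, who, addr, write, value=None):
        """Perform a read or write; record it if it trips the watchpoint."""
        with self._lock:
            if self._watch is not None:
                w_addr, owner, writes_only = self._watch
                if addr == w_addr and who != owner and (write or not writes_only):
                    self.conflicts.append((who, addr, "write" if write else "read"))
            if write:
                self._cells[addr] = value
            return self._cells.get(addr)


def probed_access(mem, who, addr, write, value=None, delay=0.2, armed=None):
    """Arm a watchpoint, wait a little, then perform the original access."""
    # Assumption: a read conflicts only with writes, a write with any access.
    mem.set_watch(addr, who, writes_only=not write)
    if armed is not None:
        armed.set()              # demo hook: signal that the watchpoint is armed
    time.sleep(delay)            # the small delay before the instruction runs
    mem.clear_watch()
    return mem.access(who, addr, write, value)


if __name__ == "__main__":
    mem = ToyMemory()
    armed = threading.Event()
    other = threading.Thread(
        target=lambda: (armed.wait(), mem.access("irq-handler", 0x1000, True, 7)))
    other.start()
    probed_access(mem, "xmit", 0x1000, write=False, armed=armed)
    other.join()
    print(mem.conflicts)         # the write caught during the delay, if any
```

In the demo the second thread writes to the watched address while the first one is delayed, so the write should end up in `mem.conflicts`, which corresponds to a confirmed race.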

There were many questions asked both during the talk and after it. Among other things, the audience was interested in the following.

- Plans to support ARM (the mentioned tools currently work on x86 only) - maybe later, not in the near future.

- Situations when KernelStrider misses data races - yes, this is possible in some cases, mostly due to how ThreadSanitizer works as well as due to sometimes inaccurate event ordering rules used.

- Support for suspend/resume in KernelStrider - yes, KernelStrider operates during suspend and resume too.

- Support for analysis of the kernel proper rather than the modules in KernelStrider and RaceHound - not implemented at the moment.

- Instrumentation of the code to be analyzed during the compilation rather than during its loading as KernelStrider does now - may be beneficial, it is actually one of the future directions of the development.

- and so on.

The developers from Intel actively participated in the discussion of the races found by the tools mentioned above. It is no surprise, because these races were found in the e1000 network driver, created by Intel. A strange thing became obvious during that discussion: it is a common practice in network drivers not to use synchronization in some cases even if a race may happen as a result (and races were actually found there). This is the case, for example, for NAPI and some of the functions involved in data transmission. The reason is probably to avoid performance losses due to locking, but estimates of such losses, as well as guidelines on how to avoid problems there, are yet to be found.

It seems that many kernel developers share the following attitude to the data races:

- Have you observed any particular problems due to that race? Has anything crashed or otherwise worked wrong?

- Not yet.

- Oh, well.

And nothing happens then.

Reasonable? Perhaps, but if one remembers this article, for example, the reason becomes less certain.

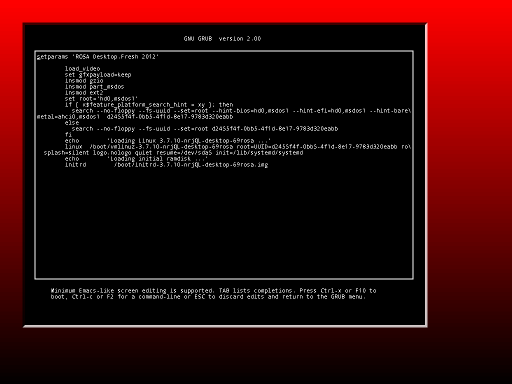

More GRUB2 upgrades and bugfixes

Our developers continue upgrading the GRUB2 bootloader.

14 patches were made and taken into the upstream.

Updates Builder – Pull Requests and automatic correction of build failures

The repositories of ROSA Desktop Fresh R1 contain more than 15,000 packages that can satisfy the needs of almost every user. However, it is not easy to maintain such a large set of applications, libraries and other software components. New versions of many programs are released very frequently, and maintainers have to constantly track them, build them for ROSA, test them and decide whether it makes sense to update the ROSA package to the newer version. To automate these tasks, we use the Updates Builder tool, which constantly monitors upstream and automatically builds new versions in ABF.

Initially, Updates Builder worked in a pretty simple way: to build a new package version, it took the spec file from the previous version and replaced the version value and the tarball name. This approach turned out to be very effective: new versions of many packages contain only minimal changes with respect to the previous ones, so no serious spec file modifications are needed to build them. As a result, during the first three months of Updates Builder usage we updated several hundred packages with it. However, during these three months we also discovered ways to make the tool more effective.
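The "replace the version value and the tarball name" step can be sketched as follows. This is an illustration under our own assumptions, not the Updates Builder code: the function name `bump_spec` and the sample spec are hypothetical, and the tarball name is only touched when it spells the old version literally.

```python
import re


def bump_spec(spec_text, new_version):
    """Return spec_text with the Version tag and tarball names moved to new_version."""
    match = re.search(r"^Version:\s*(\S+)\s*$", spec_text, re.MULTILINE)
    if match is None:
        raise ValueError("spec file has no Version tag")
    old_version = match.group(1)

    lines = []
    for line in spec_text.splitlines():
        tag = line.split(":", 1)[0].strip().lower()
        if tag == "version":
            line = line.replace(old_version, new_version, 1)
        elif tag.startswith("source") and old_version in line:
            # Only needed when the tarball name contains the version literally
            # instead of using the %{version} macro.
            line = line.replace(old_version, new_version)
        lines.append(line)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    spec = (
        "Name: foo\n"
        "Version: 1.2\n"
        "Release: 1\n"
        "Source0: http://example.org/foo-1.2.tar.gz\n"
    )
    print(bump_spec(spec, "1.3"))
```

Everything else in the spec file is left untouched, which is exactly why this approach breaks as soon as the new version adds files or build requirements.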

It turned out that new versions of packages often fail to build due to trivial issues: for example, the new version has a couple of new files not mentioned in the spec, requires new BuildRequires entries and so on. These changes lead to build failures but can be fixed by small modifications of the spec. So why not teach the tool to apply typical modifications automatically?

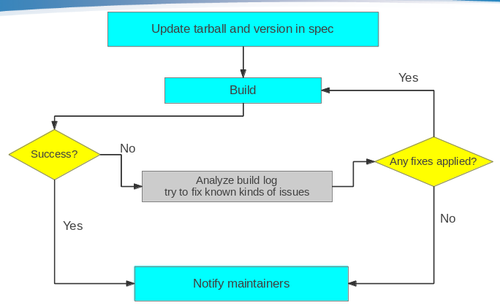

To implement such a feature, we have developed special scripts that analyze the errors that occurred during the package build. The scripts are launched automatically when a build triggered by Updates Builder fails. If the scripts detect one of the errors known to them, they automatically fix the spec file to avoid these errors and rebuild the package. This process can be repeated several times, since new errors may show up as the old ones disappear. Finally, Updates Builder workflow in ROSA looks like the following:

Currently the scripts try to fix the following kinds of errors:

- Unneeded patches

  - Reversed (or previously applied) patch detected

- Missing build requirements on Perl modules

  - Can't locate <perl_module> in @INC

- Added or removed files

  - File not found / File not found by glob

  - Missing file specified in %doc

  - Installed (but unpackaged) file(s) found

- Rpmlint errors

  - debuginfo-without-sources and empty-debuginfo-package

Note that different heuristics are used to fix such issues and we can't guarantee that the fixes applied are always correct, even if the package was successfully built after them. For example, to avoid debuginfo-without-sources and empty-debuginfo-package errors we currently disable debug package creation. However, it could be more correct to mark the package as architecture-independent (noarch) or add some build and compiler flags without which debug information is not generated. So before merging updates suggested by Updates Builder to official repositories maintainers should first analyze the changes applied; it is possible that some additional corrections should be made.
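To give an idea of how such log analysis might look, here is a minimal sketch that maps some of the error messages listed above to a suggested spec change. It is not the actual ROSA scripts: the pattern table, the function `classify` and the wording of the suggestions are our own assumptions, and only a subset of the listed errors is covered.

```python
import re

# (pattern matched against a build log line, function producing a suggestion)
KNOWN_ERRORS = [
    (re.compile(r"Reversed? \(or previously applied\) patch detected"),
     lambda m: "unneeded patch: drop it from the spec"),
    (re.compile(r"Can't locate (\S+)\.pm in @INC"),
     lambda m: "missing Perl module: add BuildRequires: perl(%s)"
               % m.group(1).replace("/", "::")),
    (re.compile(r"File not found(?: by glob)?: (\S+)"),
     lambda m: f"removed file: delete {m.group(1)} from %files"),
    (re.compile(r"Installed \(but unpackaged\) file\(s\) found"),
     lambda m: "new files: add the listed paths to %files"),
    (re.compile(r"debuginfo-without-sources|empty-debuginfo-package"),
     lambda m: "rpmlint debuginfo error: disable debug package creation"),
]


def classify(log_text):
    """Return one suggestion per log line that matches a known error."""
    suggestions = []
    for line in log_text.splitlines():
        for pattern, suggest in KNOWN_ERRORS:
            match = pattern.search(line)
            if match:
                suggestions.append(suggest(match))
                break
    return suggestions


if __name__ == "__main__":
    log = (
        "Can't locate Test/More.pm in @INC (@INC contains: ...)\n"
        "error: File not found by glob: /usr/share/foo/*.png\n"
        "foo-debuginfo.x86_64: E: empty-debuginfo-package\n"
    )
    for suggestion in classify(log):
        print(suggestion)
```

A table of patterns keeps each heuristic independent, so a new kind of error can be supported by adding one entry. As noted above, a suggestion produced this way is only a guess and still needs a maintainer's review.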

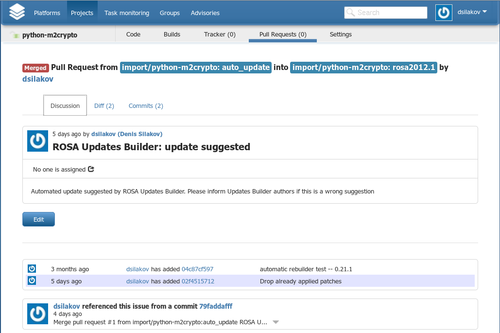

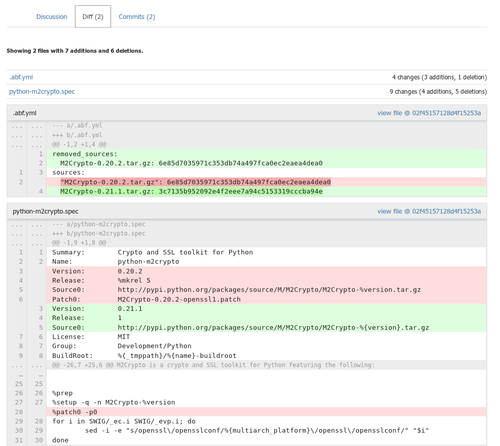

By the way, the process of analyzing the updates suggested by Updates Builder and merging them into the official repositories has become much more convenient. Until now, Updates Builder only sent emails with build results, and maintainers then had to manually inspect the changes in the auto_update branch of the Git repository and merge them into the official branches. From now on, when a new package version builds successfully, a Pull Request is automatically created in ABF. Maintainers can review all modifications visually and accept them with a single button.

Urpmi, rpmdrake and automated dependency resolution

A specific feature of ROSA repositories (as well as of the repositories of many other Linux-based systems) is that the dependencies of some packages can be resolved in multiple ways, since sometimes several packages provide the same feature. For clarity, let's look at an example.

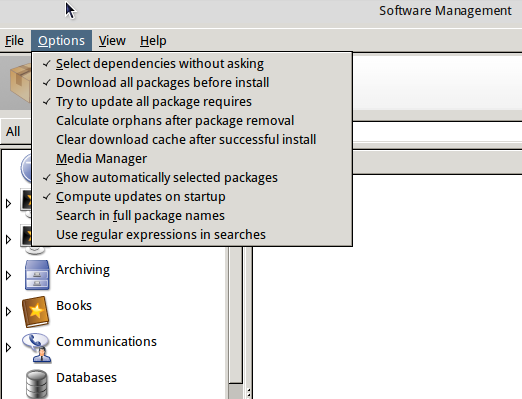

Our repositories contain Tesseract OCR, which requires language-specific packs to work with particular languages. Tesseract currently supports more than 70 languages, and a separate data package exists in our repositories for every language. But it is unlikely that a user will need all these languages; for most users, support for their native language is enough. So when installing tesseract, we should somehow decide which language packs to install. To indicate that there is a choice, the following trick is performed on the package level: the tesseract package itself requires tesseract-language, and every package with language-specific data provides tesseract-language. When installing tesseract, urpmi (or Rpmdrake) detects that the tesseract-language requirement can be resolved in multiple ways. If this is the case, urpmi and Rpmdrake either ask the user to choose or perform the selection automatically (if --auto or the corresponding checkbox in the Rpmdrake settings is specified).

By the way, in the recent versions automatic dependency selection in Rpmdrake is turned on by default.
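The requires/provides trick described above can be modeled in a few lines. This is a toy model, not urpmi's resolver: the package names `tesseract-eng`, `tesseract-rus` and `tesseract-deu` are hypothetical, and picking the first candidate by name in automatic mode is a placeholder policy, since the text does not say how urpmi makes its automatic choice.

```python
def providers(requirement, packages):
    """Names of the packages whose 'provides' list contains the requirement."""
    return sorted(name for name, meta in packages.items()
                  if requirement in meta["provides"])


def resolve(requirement, packages, auto=False, ask=None):
    """Pick one package that satisfies the requirement."""
    candidates = providers(requirement, packages)
    if not candidates:
        raise LookupError(f"nothing provides {requirement}")
    if len(candidates) == 1 or auto:
        return candidates[0]          # placeholder policy: first by name
    return ask(requirement, candidates)


# Hypothetical repository metadata.
PACKAGES = {
    "tesseract": {"requires": ["tesseract-language"], "provides": ["tesseract"]},
    "tesseract-eng": {"requires": [], "provides": ["tesseract-language"]},
    "tesseract-rus": {"requires": [], "provides": ["tesseract-language"]},
    "tesseract-deu": {"requires": [], "provides": ["tesseract-language"]},
}

if __name__ == "__main__":
    for requirement in PACKAGES["tesseract"]["requires"]:
        print(providers(requirement, PACKAGES))
        print(resolve(requirement, PACKAGES, auto=True))
```

In interactive mode the `ask` callback plays the role of the question urpmi or Rpmdrake shows to the user.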

GRUB2: new options - terminal window size and position

Our developers continue to improve GRUB.

This time they have added new options. Now you can set the terminal window size and position in the theme file.

You can also change the terminal border width (the free space left on each side inside the terminal window).

terminal-left: "50"
terminal-top: "50"
terminal-width: "800"
terminal-height: "600"
terminal-border: "10"

The patch has been approved by the upstream and added to the source code.

ROSA Planet gets rolling release

"ROSA Planet" switches to rolling release scheme

Our regular readers should remember our technical bulletin called "ROSA Planet", aimed at readers with good technical and IT skills. Surely it was good, but it had an old-fashioned magazine format, it followed a one-month release schedule and it was wrapped in PDF. Those things are not compatible with the modern fast, mobile and super cool lifestyle.

Good news everyone!

We are switching to "ROSA Planet 2.0", which works almost like a rolling release: new articles appear as soon as they are ready, so the content always stays fresh and cool. In other words, this is a classical blog, which you may read in chronological, sequential or calendar mode. You may also subscribe to the Atom feed here: http://wiki.rosalab.ru/en/index.php?title=Blog:ROSA_Planet&feed=atom

You may easily navigate to the blog by clicking the link ROSA Planet on the left side of the main page of our wiki.

And lest you miss the most interesting articles, once a month we will release a special digest issue with an additional PDF file for those users who prefer reading offline.

Happy reading!

And do not hesitate to use good old-fashioned e-mail to contact us at info@rosalab.ru.

GRUB2: an improved option and bug fixes

Our developers continue to improve GRUB2. Some bugs have been found and fixed. Also, one option has been improved. The patches were sent to the upstream and accepted.

The first complete theme tutorial for GRUB2

Our developer has written the first complete theme tutorial for GRUB2.

This tutorial is a detailed step-by-step description of all stages of graphical theme creation. Every option is listed with a description of its purpose, possibilities, limitations and features. The material contains examples of theme files and screenshots of the results.

With the formulas and schemes given in the tutorial, you can make your own theme with pixel precision.

This tutorial is suitable both for beginners and for those who have already dealt with GRUB2 themes.

You can see this tutorial at our wiki. A link to it can also be found at the official GRUB2 documentation site.

PkgDiff 1.6 - Added compatibility check

PkgDiff is a tool that visualizes changes in files and attributes of all types of Linux software packages. It is intended for maintainers and QA engineers who need to verify changes and prevent unintentional ones that can break other packages in the repository. System libraries are among the most significant elements of a Linux distribution. An average Linux distribution contains several thousand libraries with a huge number of internal dependencies. For this reason, an update of a library can break the build or behavior of other libraries and eventually lead to malfunction of user applications.

In the new version of the PkgDiff tool (1.6) we have added the ability to check the compatibility of changes in libraries. This was made possible due to a new ABI Dumper tool, which can extract information about the library ABI from the debug-files, which can then be analyzed by the ABI Compliance Checker tool. To check the compatibility of two packages A and B (old and new versions of a package), the user should pass appropriate debug-packages to the tool and run it with the additional -details option:

pkgdiff -old A-debuginfo.rpm -new B-debuginfo.rpm -details

We have added the new ABI Status section to the output report, which shows the backward compatibility level of the library ABI. To view detailed compatibility reports one can find the Debug Info Files table in the report and follow the links in the Detailed Report column.

ABI Dumper - A tool to dump ABI from DWARF debug-info of an ELF-object

When you compile an ELF object, such as a shared library or a kernel module, with the additional -g option, debug information is inserted into the object. This information is typically used by the standard debugger gdb to provide the user with additional features when debugging the program. One can read this debug info with the --debug-dump option of the readelf or eu-readelf utility (the latter comes from the elfutils package).

An important part of any ELF object is its binary interface (ABI), provided for its client applications to use. In essence, it is a representation of the object's API on the binary level (after compilation). When you update the object in the distribution, it is important to keep the ABI backward-compatible; otherwise applications may malfunction or crash. Changes in the ABI are usually caused by corresponding changes in the API of an object or by changes in the configuration and compilation options. To track changes in the ABI of an object we use the ABI Compliance Checker tool. But until now it could only analyze shared libraries by extracting information from header files.

In order to expand and simplify usage of the ABI Compliance Checker tool, we use ABI Dumper tool for extracting ABI information from the debug-info of an object. Now, with the help of this tool one can track changes not only in the ABI of libraries, but also, for example, in the ABI of kernel modules. A typical use case is to create ABI dumps for the old and the new version of an object:

abi-dumper libtest.so.0 -o ABIv0.dump
abi-dumper libtest.so.1 -o ABIv1.dump

and then compare them:

abi-compliance-checker -l libtest -old ABIv0.dump -new ABIv1.dump

Unfortunately, this approach has its drawbacks. Perhaps the main one is the inability to perform some compatibility checks. For example, it is not possible to check for changes in the values of constants (defines as well as const global data), since their values are inlined at compile time and are not present in the debug information of the binary ELF object. In general, about 98% of all compatibility rules can be checked. Another disadvantage is the long time required to analyze large objects (bigger than 50 MB). However, one can use the dwz utility to compress the input debug info.

Packaging-tools - useful tools for maintainers

ROSA provides packaging-tools, a set of scripts for maintainers that was originally developed in Ark Linux.

The set includes vs, a spec file generator for arbitrary packages, which creates a spec file template and opens it in vim (or in the editor specified in the EDITOR or VISUAL variable). There are also special spec file generators for particular types of packages:

- vl - for libraries

- vp - for Perl modules

- vj - for Java packages

These generators create spec file templates that take into account the specifics of each package type (for example, the necessary subpackages are created for libraries).

Another useful script is e, a simple wrapper for gendiff. If you want to prepare a patch for some package, unpack the archive with the source code and edit the necessary files with e. In fact, this script calls an external editor (specified in the EDITOR or VISUAL variable; vim by default), but before that it saves the source file with the suffix rosa2012.1~ (the suffix can be changed with the -s option). As soon as you have finished, call gendiff to create the patch.

Here is an example of how to prepare a patch for the file test.c from the source code of someapp-1.2.3 using the geany editor:

$ tar xvf someapp-1.2.3.tar.xz
$ cd someapp-1.2.3
$ export EDITOR=geany
$ e test.c
$ cd ..
$ gendiff someapp-1.2.3 .rosa2012.1~ >my.patch

This way may seem a bit complicated, but it is really convenient if you need to prepare a small patch for a large source file.
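The behaviour of the e script described above (save a copy of the file, then start the editor) can be sketched like this. It is an approximation rather than the script itself: the helper names are hypothetical, and two details are our assumptions, namely that VISUAL takes precedence over EDITOR and that an existing backup is never overwritten, so the pristine file survives repeated edits.

```python
import os
import shutil
import subprocess
import tempfile

DEFAULT_SUFFIX = ".rosa2012.1~"   # the extension later passed to gendiff


def save_original(path, suffix=DEFAULT_SUFFIX):
    """Copy path to path+suffix unless such a backup already exists."""
    backup = path + suffix
    if not os.path.exists(backup):
        shutil.copy2(path, backup)
    return backup


def pick_editor(environ):
    """Editor from VISUAL or EDITOR, falling back to vim (order is an assumption)."""
    return environ.get("VISUAL") or environ.get("EDITOR") or "vim"


def edit(path, suffix=DEFAULT_SUFFIX, environ=os.environ):
    """Back up the file, then open it in the chosen editor."""
    save_original(path, suffix)
    return subprocess.call([pick_editor(environ), path])


if __name__ == "__main__":
    # Demonstrate only the backup step; edit() would start a real editor.
    with tempfile.TemporaryDirectory() as tmp:
        source = os.path.join(tmp, "test.c")
        with open(source, "w") as handle:
            handle.write("int main(void) { return 0; }\n")
        print(save_original(source))
```

Because every edited file gets a sibling with the same suffix, gendiff can later find all changed files in the tree by looking for that suffix.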

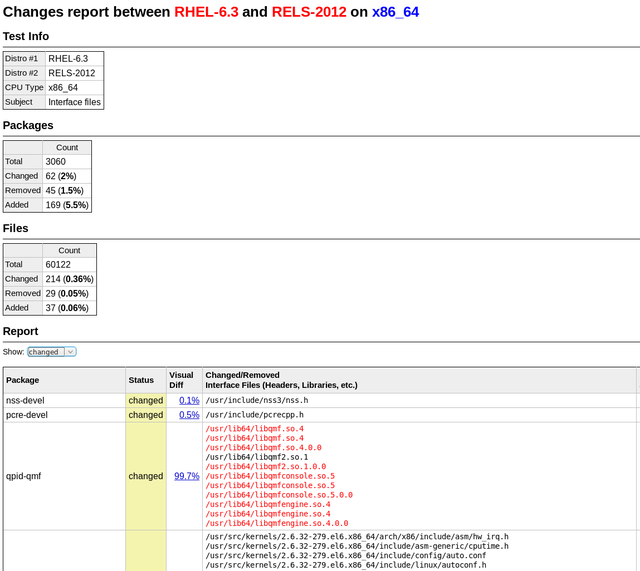

DistDiff - visualizing changes in Linux Distros

It isn't easy to release stable updates for a Linux distribution. You have to check that all applications will work properly after the update. Applications can be divided into two big groups: basic (shipped with the distribution) and personal (installed by the user from other sources). Basic applications can be checked easily by installing them into the updated distribution and then testing them manually. But we know nothing about applications from the second group. So, besides checking that applications work, you also have to review the list of all changes in packages.

Compatibility of changes in system libraries can be checked with the ABI Compliance Checker tool. To check changes in other packages, we have developed the DistDiff tool. It helps to visualize changes in all packages of the distribution and quickly look through them for compatibility violations. The tool only needs two directories as input: one with the old packages and one with the new ones. In the default mode, the tool checks changes in interface files (libraries, modules, scripts and others) that can potentially affect compatibility. It can also check all files if the "-all-files" option is given.

It is based on PkgDiff, a tool we developed earlier to visualize and compare changes in packages.

GRUB2 - a memory leak and many progress bar bugs fixed

Our developers tend to extend and improve any program they deal with. That's why they have created 5 more updates for the GRUB2 bootloader. The patches were sent to the GRUB2 upstream and accepted.

MagOS Linux based on ROSA Marathon

It is nice to have ROSA on your own machine, but sometimes you have to work on someone else's computer where you can't change the operating system. In many cases a LiveCD will help, and ROSA makes it possible to boot into Live mode from a flash drive or CD/DVD. However, though very useful in many respects, the Live mode suffers from at least the following issues:

- you can save the results of your work on a hard drive of the computer, but it is not easy to save them on the flash drive from which you have booted;

- there is no way to change system settings, save them and use them during the next boot;

- finally, to write the ISO image to a flash drive and boot into the Live mode, you first have to move all files from the flash drive somewhere else, even if the drive has dozens of GB of free space and the ROSA ISO image only needs 1.5 GB.

MagOS Linux has none of these disadvantages. Traditionally, MagOS was based on Mandriva, and some time ago a ROSA-based variation was introduced.

Distribution builds are available here: http://magos.sibsau.ru/repository/dist/. The build based on ROSA Marathon has 2012lts suffix (at the moment of writing this paper, the latest build was MagOS_2012lts_20130228.tar.gz).

The build is actually a tarball with three folders — boot, MagOS, MagOS-Data. To install MagOS on your flash drive, you should unpack the tarball and place these three folders in the root of your flash. There is no need to remove existing data from flash, but remember that the system needs about 2Gb of space.

Note that the flash drive must be mounted without the 'noexec' option so that scripts can be launched directly from it (this is required to make a flash drive with MagOS bootable). ROSA uses the 'noexec' option by default, so you will have to mount your flash drive manually from the command line.

In order to do this, launch console with root privileges (e.g., in KDE press Alt-F2 and «kdesu konsole») and execute the following command:

mount -o remount,exec /dev/sdx /mount_point

(here /dev/sdx is the device file corresponding to your flash drive, and /mount_point is the directory where the flash drive is mounted).

Or just remount your flash drive:

mount -o remount,exec /dev/sdb1 /mount_point

Now copy the boot, MagOS and MagOS-Data folders to /mount_point, go to the /mount_point/boot/syslinux folder and launch install.lin.

You will be asked whether you are sure you want to make the device bootable. To agree, press Enter.

Now leave the /mount_point folder and unmount the flash by 'umount /mount_point' command.

Now reboot your machine and boot from flash. You should see MagOS boot selection menu with several boot options.

The system is based on ROSA, but by default a standard KDE is used, without SimpleWelcome and RocketBar. Besides KDE, one can load Gnome or LXDE; to do this, logout and choose appropriate session type in the menu at the bottom of the screen. Remember that default user/password are user/magos.

MagOS Linux can be installed on a flash drive not only from Linux, but from Windows as well. It is also possible to install the system on an ordinary machine, either as a standalone system or inside an existing Windows partition.

More details can be found at the MagOS wiki (http://www.magos-linux.ru/dwiki/doku.php), but unfortunately for our foreign users, all documentation is in Russian. However, MagOS Linux is very easy to use, and in most cases you won't need any additional documentation at all. For getting started, the documentation on the wiki should be enough: though the text is in Russian, the commands to be executed should be clear to any user. Also note that the first thing you will likely want to do after booting is to change the locale of the system, which is set to Russian by default. To do this, go to KDE CC, find the locale settings and choose English (this option is available by default; to choose other languages, you will have to install the appropriate localization packages, at least the kde-l10n ones).

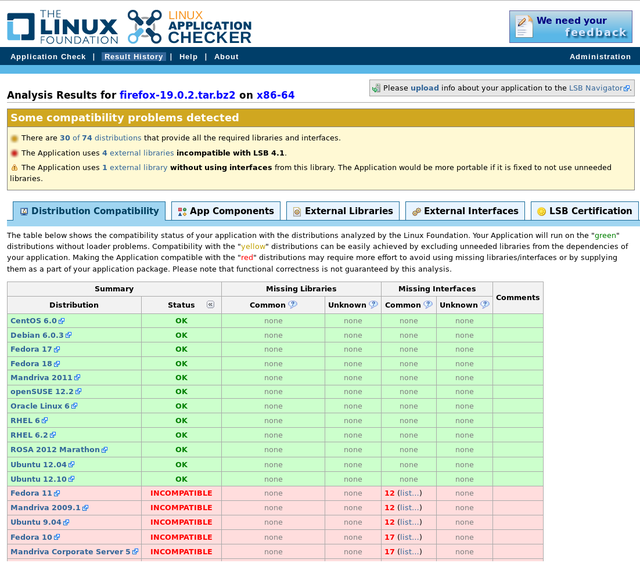

ROSA Marathon is included in the Linux Application Checker knowledge base

The Linux Foundation consortium has announced an updated version (4.1.8) of the Linux Application Checker (AppChecker) — a tool aimed to analyze compatibility of applications with different Linux distributions and check their compliance with Linux Standard Base (LSB). Currently AppChecker database for the x86 platform contains data about 84 distributions, now with ROSA 2012 Marathon among them. We are going to continue our collaboration with Linux Foundation engineers and provide them with necessary information about ROSA releases.

AppChecker analyzes the compatibility of an application with a particular distribution by comparing the set of shared libraries and binary symbols required by the application with the sets of libraries and symbols provided by the system. Satisfying these requirements is a necessary condition for launching the application successfully: if some library or symbol is absent, the application cannot be launched in the distribution. The list of libraries and binary symbols required by the application is obtained by analyzing the application's binary executables (in ELF format) and shared libraries. Only those requirements are taken into account that are not satisfied by the libraries of the application itself. AppChecker actually emulates the work of the system loader during application launch; if some required libraries or symbols are missing in the system, the application will simply fail to start (or will silently fail later while running, if lazy binding is used).

One should note that AppChecker contains data only about a limited set of widespread libraries, not about all those that exist in distribution repositories. More precisely, the information is guaranteed to be correct for libraries from the 'approved' list (http://linuxbase.org/navigator/browse/rawlib.php?cmd=display-approved), which currently contains almost 1,500 entries, while the repositories of most distributions (in particular, ROSA) contain several thousand libraries. If an application requires a library not included in the approved list, AppChecker will honestly report that it can't say whether this library is present in particular distributions or not.
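The comparison described above boils down to set operations. The sketch below is not AppChecker code: the function `check`, its result strings and the sample data (including the symbol name `inflateNew`) are hypothetical and only illustrate the three outcomes mentioned in the text, that is, a satisfied requirement, a missing library or symbol, and a library outside the approved list.

```python
def check(required, provided, approved, bundled=()):
    """Compare what an application needs with what a distribution offers.

    required, provided: {library name: set of symbol names}
    approved: library names the knowledge base has reliable data about
    bundled: libraries shipped with the application itself (skipped)
    """
    report = {}
    for library, symbols in required.items():
        if library in bundled:
            continue                          # satisfied by the application
        if library not in approved:
            report[library] = "unknown"       # no reliable data for this library
        elif library not in provided:
            report[library] = "missing library"
        else:
            missing = sorted(symbols - provided[library])
            if missing:
                report[library] = "missing symbols: " + ", ".join(missing)
            else:
                report[library] = "ok"
    return report


if __name__ == "__main__":
    required = {
        "libc.so.6": {"memcpy", "fopen"},
        "libz.so.1": {"inflate", "inflateNew"},
        "libfoo.so.1": {"foo_init"},
        "libapp.so": {"app_main"},
    }
    provided = {
        "libc.so.6": {"memcpy", "fopen", "printf"},
        "libz.so.1": {"inflate"},
    }
    approved = {"libc.so.6", "libz.so.1"}
    print(check(required, provided, approved, bundled={"libapp.so"}))
```

A symbol-level mismatch such as the one for libz.so.1 in this sample is the kind of problem that typically prevents a new application build from starting on an old distribution.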

As an example usage, one can see that the Firefox build downloaded from http://mozilla.org (of the version 19.0.2 at the moment of writing this article) cannot be used in old systems such as Fedora 10 or Ubuntu 9.04.

ROSA tools in upstream

While creating ROSA, we not only develop and adapt different packages for our distribution, but also design new tools for other developers.

One such tool is API Sanity Checker, which automatically generates tests for C/C++ libraries.

The tool requires only header files with declarations of the library functions (and all necessary data types). Using this information, API Sanity Checker generates tests that call every function from the library with proper arguments. Usually these automatically generated tests are used as a template for developing more complex test sets (with enumeration of different parameter values, their combinations, etc.).

The tool is absolutely free (the source code can be found here) and can be used by everybody. For example, not long ago API Sanity Checker was integrated into the development cycle of the GammaLib library. As a result, 11 errors were found and fixed in that library (https://cta-jenkins.irap.omp.eu/job/gammalib-sanity/).

We recommend that upstream developers of C/C++ libraries follow this successful example. The resources needed to create a test set are minimal, but the number of errors found can be really large.

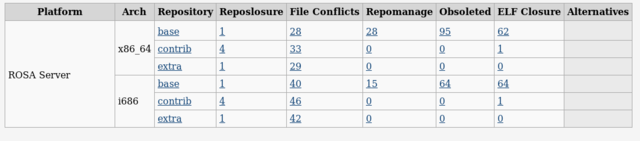

Monitoring of RELS Repositories

As many of you likely know, ROSA repositories are subjected to constant monitoring aimed to detect potential problems in the package base. For a long time, regularly updated results of such monitoring for ROSA Desktop series have been publicly available at http://fba.rosalinux.ru (by the way, we have recently added one more kind of reports — «File Conflicts» — that reflects packages containing the same files but not explicitly marked as conflicting using the Conflicts tag).

But Desktop is not the only direction of ROSA development; another important member of the ROSA OS family is ROSA Enterprise Linux Server (RELS). Currently the same kinds of reports are available for RELS and ROSA Desktop, except for the Alternatives analysis (which is currently provided for ROSA Desktop only, though we plan to add it for RELS in the near future as well).

As one can see from the report table, RELS package base is in a really good shape — typical numbers for such reports are dozens or even hundreds of problems, while the number of potentially problematic packages in RELS is close to zero.
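The idea behind the «File Conflicts» report mentioned above (packages that contain the same files but are not marked as conflicting) can be expressed in a few lines. This is a simplified sketch, not the monitoring code: the function name, the data layout and the sample packages are hypothetical, and a real check would probably also need to decide how to treat shared directories or identical files.

```python
from itertools import combinations


def file_conflicts(packages):
    """Find package pairs that share files without declaring a conflict.

    packages: {name: {"files": set of paths, "conflicts": set of package names}}
    """
    found = []
    for first, second in combinations(sorted(packages), 2):
        shared = packages[first]["files"] & packages[second]["files"]
        declared = (second in packages[first]["conflicts"]
                    or first in packages[second]["conflicts"])
        if shared and not declared:
            found.append((first, second, sorted(shared)))
    return found


if __name__ == "__main__":
    repo = {
        "foo": {"files": {"/usr/bin/foo", "/usr/share/common.dat"},
                "conflicts": set()},
        "bar": {"files": {"/usr/bin/bar", "/usr/share/common.dat"},
                "conflicts": set()},
        "baz": {"files": {"/usr/bin/foo"}, "conflicts": {"foo"}},
    }
    for first, second, paths in file_conflicts(repo):
        print(first, second, paths)
```

In the sample data only foo and bar are reported: foo and baz also share a file, but baz declares the conflict explicitly.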

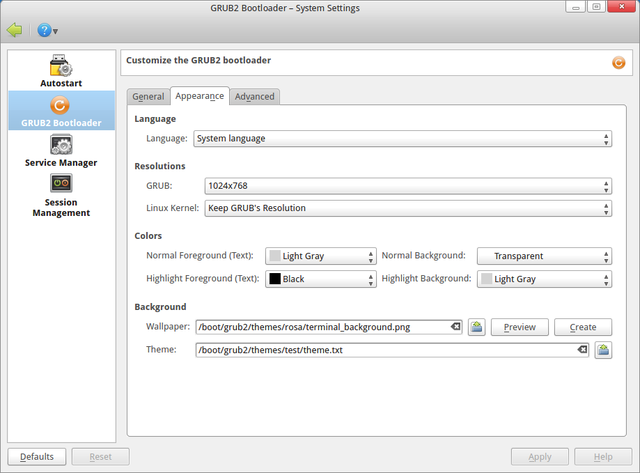

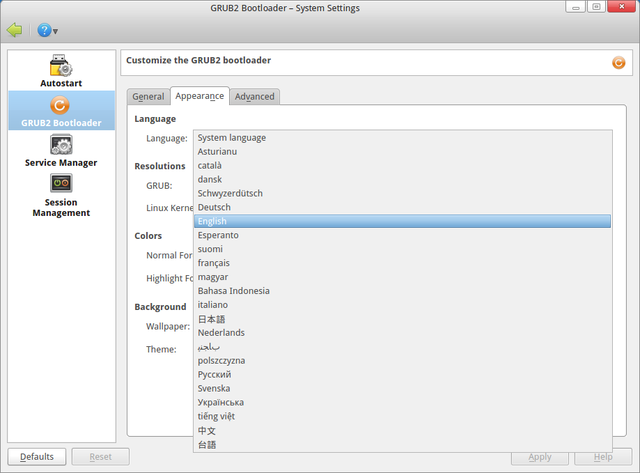

kcm-grub2 contribution - selecting the bootloader's language

Our developers have created an update for the GRUB2 manager (kcm-grub2). A new option for selecting the bootloader's language has been added. It is useful when the user needs the bootloader to use a language different from the system language.

If "System language" is selected, the GRUB2 manager and the script that regenerates the bootloader's configuration (update-grub2) behave as usual: the GRUB2 language will be the same as the system language.

Alternatively, a specific language can be selected, e.g. "English" or "Русский". Then, after the configuration is updated (by saving the new configuration in the GRUB2 manager or by calling the update-grub2 script), the GRUB2 menu will appear in the selected language.

The patch that adds the option to choose the bootloader's language was sent to the upstream and will be included in the next version of the program.

Command-not-found

You can often see a message like «bash: foo: command not found», and you surely want to know why. For example, the necessary package may not be installed, or there may just be a typo. Probably many users become confused after this:

$ rpmbuild
bash: rpmbuild: command not found
$ sudo urpmi rpmbuild
No package named rpmbuild
The following packages contain rpmbuild: java-rpmbuild, rpmbuildupdate
You should use "-a" to use all of them

For such cases the “command-not-found” tool has been created for ROSA! There are similar tools in other distributions, but until now there was no such tool for ROSA/Mandriva. From now on, all you need is to install the command-not-found package and open a new terminal. Try to type something weird:

$ foo
No command 'foo' found, did you mean:
Command 'fio' from package 'fio' (contrib)
Command 'fop' from package 'fop' (main, installed)
Command 'for' from package 'execline' (contrib)
Command 'zoo' from package 'zoo' (restricted)

Perfect! I just wanted to call “zoo” and made a typo. (By the way, notice that the “fop” package is already installed, but we don't need it now.)

$ zoo
Command 'zoo' can be found in:
package 'zoo' (restricted)
You can install it by typing:
urpmi zoo
Do you want to install it? (y/N)

All you need is to type “y”. Don't want to be offered to install a package? Set the environment variable “COMMAND_NOT_FOUND_TURN_OFF_INSTALL_PROMPT=1”, and there will be no stupid questions.

It should be mentioned that when the program runs without a TTY, it does not perform any checks; it just writes “command not found” like bash itself. Also, whatever command-not-found writes, it exits with code 127, as bash does in such cases.
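The behaviour described above can be approximated with a short sketch. It is not the command-not-found implementation: the function names are hypothetical, the tiny database is built from the sample output shown earlier, and treating "similar" as "one edit away" is our assumption (it does reproduce the suggestions fio, fop, for and zoo for foo).

```python
def one_edit_apart(a, b):
    """True if b differs from a by one substituted, added or removed character."""
    if a == b or abs(len(a) - len(b)) > 1:
        return False
    if len(a) == len(b):
        return sum(x != y for x, y in zip(a, b)) == 1
    shorter, longer = sorted((a, b), key=len)
    return any(longer[:i] + longer[i + 1:] == shorter for i in range(len(longer)))


def suggest(typed, database):
    """Known commands that look like a mistyped version of typed."""
    return sorted(cmd for cmd in database if one_edit_apart(typed, cmd))


def report(typed, database, has_tty=True):
    """Return (message, exit code); the exit code is always 127, as in bash."""
    plain = f"bash: {typed}: command not found"
    if not has_tty:
        return plain, 127                    # no checks without a TTY
    if typed in database:
        package, repo = database[typed]
        return (f"Command '{typed}' can be found in:\n"
                f"package '{package}' ({repo})"), 127
    similar = suggest(typed, database)
    if not similar:
        return plain, 127
    lines = [f"No command '{typed}' found, did you mean:"]
    for cmd in similar:
        package, repo = database[cmd]
        lines.append(f"Command '{cmd}' from package '{package}' ({repo})")
    return "\n".join(lines), 127


# command -> (package, repository), taken from the sample output above
DATABASE = {
    "fio": ("fio", "contrib"),
    "fop": ("fop", "main"),
    "for": ("execline", "contrib"),
    "zoo": ("zoo", "restricted"),
}

if __name__ == "__main__":
    message, code = report("foo", DATABASE)
    print(message)
    print("exit code:", code)
```

Keeping the exit code at 127 in every branch means that scripts relying on the usual shell behaviour do not notice the difference.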

One more command-not-found feature is the analysis of installed packages. If you type a command whose package is already installed, you will see something like this:

$ ifconfig
Command 'ifconfig' can be found in:
package 'net-tools' (main, installed)
File /sbin/ifconfig exists! Check your PATH variable, or call it using an absolute path.

The command-not-found package also contains the “cnf” utility. It does everything described above (in fact, it is executed every time bash fails to run a command). In other words, "cnf foo" gives you the same output as typing “foo” in the console. You can use “cnf” to learn which package an installed program came from.

You have probably already installed command-not-found. Did you notice that another package, command-not-found-data, was installed along with it? This package contains the database (a file in JSON format) from which cnf takes its information. As the repositories are always changing, the information in this database has to be updated from time to time. That is why this package is rebuilt with up-to-date data once a week and comes to you with other updates.

We hope that your work with console will become more pleasant :)